Artificial Intelligence has become a popular topic in the past decade, and the popularity of humanoid robots definitely plays a big part in this. In the 90’s, however, Artificial Intelligence was a topic strictly for scientists. Given this contrast, I believe that articles on Artificial Intelligence from the 90’s contain more technical terms than the ones from the 2010’s. Moreover, I would not be surprised if the 2010’s articles have lower percentages of AWL (Academic Word List) and GSL (General Service List) 2nd 1000 words than the 90’s articles, which would make them more informal. Therefore, the purpose of this paper is to analyze the differences between Artificial Intelligence articles from the 90’s and from the 2010’s. To do so, this study will attempt to answer the following 3 questions:

- What are the most used AWL words in each decade?

- What are the percentages of AWL, GSL, AI (specific) Technical words, and Other Technical words in each decade?

- What are the most used AI Technical words from each decade?

“Word List”, in the Tool Preferences of AntConc, will be the key tool that we will use to answer the questions mentioned above. I also collected AI specific technical words from this link. This way, I can upload the list in Tool Preferences (Word List) in AntConc and run my analysis to calculate the percentages.

In total, we will use 24 articles from the 90’s and 10 articles from the 2010’s. All of the articles were found on arXiv, an open-access archive of over 2 million scholarly articles.

Artificial Intelligence is often shortened to AI, so we will use this acronym in this paper.

In this paper, we will seek to answer these 3 main questions and compare the results with my expectations.

Nonetheless, I would like to make two important observations before we jump to the plan of the paper.

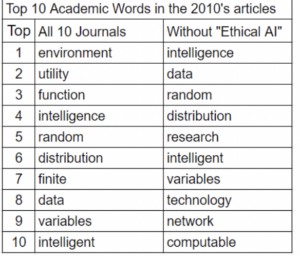

Firstly, I was trying to answer the first question in my proposal (What are the top-10 most frequently used academic words in selected journal articles?) when I made a shocking observation. “Environment” ranked first among the 10 selected journals from the 2010’s (Table 1). Naturally, I clicked on “environment” to examine how it is used in the journals. To my surprise, 395 out of 404 occurrences of the word “environment” were in one single article: “Ethical Artificial Intelligence” by Bill Hibbard. Thus, I became curious to quantify how much this one particular article affects the analysis that I am attempting to do. I compared the analysis results from all 10 articles with those from the 9 articles excluding “Ethical Artificial Intelligence”. First, we will highlight one huge difference, and then the similarities.

As we can see in Table 1, the article “Ethical AI” had a big impact on the top 10 AWL words used. Not only did the word “environment” drop out of the chart, but it also almost completely disappeared from the list of AWL words used, with only 9 occurrences across the 9 remaining articles. We can also observe that the words “utility” (top 2), “function” (top 3), and “finite” disappeared from the chart, while “research”, “technology”, “network”, and “computable” made their appearance. As a result, it would be reasonable to state that “Ethical AI” is responsible for the top 3 positions (and the 7th position) of the AWL leaderboard.

The term “environment” here does not refer to a physical environment but to a virtual (computing) environment. Here are some examples of its use in “Ethical AI”: “An AI Testing Environment” (Bill Hibbard, p.iv) and “rewards over many interactions between π and a stochastic environment μ” (Bill Hibbard, p.25).
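The effect of a single article on one word can be checked with a short script. The following is a minimal sketch in Python, not the AntConc procedure used in this paper: the function names and the toy corpus are hypothetical, and the tokenizer simply keeps lowercase alphabetic strings.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def word_share_by_document(corpus, word):
    """Return {document name: share of all occurrences of `word`}."""
    counts = {name: Counter(tokenize(text))[word] for name, text in corpus.items()}
    total = sum(counts.values())
    if total == 0:
        # The word never occurs, so every document has a share of zero.
        return {name: 0.0 for name in counts}
    return {name: count / total for name, count in counts.items()}


# Toy corpus (hypothetical texts, not the articles analysed in this paper).
toy_corpus = {
    "article_a": "the environment rewards the agent in the environment",
    "article_b": "the network learns from data",
    "article_c": "a stochastic environment and a neural network",
}

shares = word_share_by_document(toy_corpus, "environment")
for name, share in shares.items():
    print(f"{name}: {share:.1%}")
```

Applied to the counts reported above, 395 out of 404 occurrences corresponds to a share of roughly 97.8% for one article.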

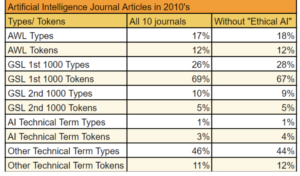

However, removing “Ethical AI” does not change much in the percentages of AWL, GSL, Technical,

Secondly, I ended up using 24 articles from the 90’s instead of just 10 because of the number of terms that should be covered. My proposal was to compare 10 articles from the 90’s to 10 articles from the 2010’s, but at first I could not find any articles on AI from the 90’s. I tried for a long time without success. Because of that, I had the idea to compare the 2000’s and the 2010’s instead, but I just could not let go of my initial plan. I refused to believe that computer scientists in the 90’s had not written articles about AI.

Then, finally, after more research, I came to realize that most writings on AI in the 90’s did not have “Artificial Intelligence” in their titles. The reason is that the definition of Artificial Intelligence has changed over the years. Any small machine learning activity of today could have been the AI of the 70’s. What the 80’s considered AI might not be the 90’s AI, and so on. This is due to the rapid progress in the field of Computer Science. Thus, many computer scientists preferred to use more specific terms that better fit what their algorithms did. Here are some examples: deep learning, cognitive science, collective intelligence, automatic model generation, multi-agent learning, reinforcement learning, etc. There are many more, which I am trying to cover with the 24 articles. This is important because they are the building blocks of the AI that we know today.

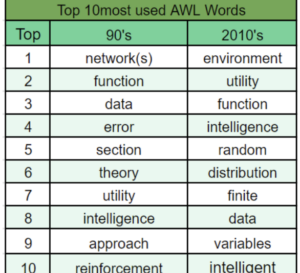

Back to the purposes of the paper. As for the top 10 AWL words used in each decade (see Table 3), some words stay consistent, while some words on one side have similar meanings to words on the other side. On one hand, the words “function”, “data”, “utility”, and “intelligence” appear in the top 10 on both sides. On the other hand, “network”, “error”, and “section” in the 90’s respectively have similar meanings to “environment”, “random”, and “distribution” in the 2010’s. However, we need to look at how they are used in the articles. Take “network” and “environment” for example.

Here are two instances of the use of “network” in an article from the 90’s:

“… background knowledge to select a neural network’s topology and initial weights …” (David W. Opitz, p.178).

“… hand, fully connect consecutive layers in their networks, allowing each rule the possibility of adding …” (David W. Opitz)

Here are two instances of the use of “environment” in “Ethical AI”, an article from the 2010’s:

“… An AI Testing Environment …” (Bill Hibbard, p.iv),

“… rewards over many interactions between π and a stochastic environment μ …” (Bill Hibbard, p.25).

To my understanding, “network” and “environment” could be used interchangeably in these cases. Therefore, it would be fair to say that the idea of “environment” persists in the AI field, except that the term “network” is used more often than “environment” in the 2010’s.

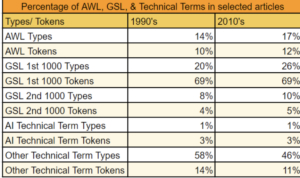

Next, I used the Tool Preferences in AntConc to calculate the percentages of AWL, GSL 1st 1000, GSL 2nd 1000, AI technical terms, and Other Technical terms. I decided to do so for both types (distinct words) and tokens (all occurrences), which reveals more details about the articles. We can observe more similarities than differences in Table 4. While the percentages of tokens remain relatively the same, the 90’s articles have lower percentages of types in AWL and GSL. However, although the 90’s and the 2010’s articles have the same percentage of AI specific technical terms, the 90’s journals have 12% more (non AI specific) technical term types than the 2010’s. Consequently, we can deduce that the writers of AI journals in the 90’s used more technical words than the ones in the 2010’s. In addition, from the analysis in AntConc, I was able to find out that the 90’s articles contain 2,662 more technical word types than the 2010’s articles. This suggests that the 2010’s might no longer use many terms from the 90’s.

Moreover, the 90’s journals contain not only 8% fewer GSL (1st & 2nd 1000s) types than the 2010’s, but also 3% fewer AWL types and 2% fewer AWL tokens. As a result, I believe it is fair to say that the 90’s journals are more technical than the 2010’s, and that the 2010’s are more academic.
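To make the difference between token percentages and type percentages concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a reproduction of AntConc: the `coverage` function and the tiny word lists are hypothetical, each word is assigned to the first list that contains it, and only exact word forms are matched (the real AWL and GSL group words into families).

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def coverage(text, word_lists):
    """Return {list name: (token %, type %)}; unmatched words go to 'other'."""
    token_counts = Counter(tokenize(text))
    total_tokens = sum(token_counts.values())  # every occurrence counts
    total_types = len(token_counts)            # each distinct word counts once
    labels = list(word_lists) + ["other"]
    if total_tokens == 0:
        return {label: (0.0, 0.0) for label in labels}

    tokens_in = Counter()
    types_in = Counter()
    for word, count in token_counts.items():
        # Assumption: a word belongs to the first list that contains it.
        label = next(
            (name for name, words in word_lists.items() if word in words),
            "other",
        )
        tokens_in[label] += count
        types_in[label] += 1

    return {
        label: (
            100 * tokens_in[label] / total_tokens,
            100 * types_in[label] / total_types,
        )
        for label in labels
    }


# Hypothetical mini word lists, only for illustration.
toy_lists = {
    "gsl": {"the", "and", "test"},
    "awl": {"data"},
    "ai": {"model"},
}
result = coverage("The data and the model test the data", toy_lists)
for label, (token_pct, type_pct) in result.items():
    print(f"{label}: {token_pct:.1f}% of tokens, {type_pct:.1f}% of types")
```

In this toy sentence, “the” occurs three times, so it adds three tokens but only one type. This is why a corpus can have similar token percentages to another one while its type percentages differ.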

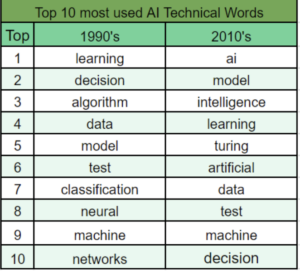

Last, but not least, we can see the list of top 10 most used AI specific (technical) terms in Table 5. Six words are in the top 10 on both sides: “learning”, “decision”, “data”, “model”, “test”, and “machine”.
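A comparison like this one, finding which top-ranked terms two decades share, can be sketched as follows. This is only an illustration in Python: the function names, the term list, and the two toy texts are hypothetical and do not come from the articles or from AntConc.

```python
import re
from collections import Counter


def top_words(text, word_list, n=10):
    """Return the n most frequent words of `text` that appear in `word_list`."""
    tokens = re.findall(r"[a-z]+", text.lower())
    counts = Counter(token for token in tokens if token in word_list)
    return [word for word, _ in counts.most_common(n)]


def shared_top_words(text_a, text_b, word_list, n=10):
    """Return the words that are in the top n of both texts, in text_a's order."""
    top_b = set(top_words(text_b, word_list, n))
    return [word for word in top_words(text_a, word_list, n) if word in top_b]


# Hypothetical AI term list and toy texts, only for illustration.
ai_terms = {"learning", "model", "data", "agent"}
text_90s = "learning learning learning model model data"
text_2010s = "data data data learning learning agent"

shared = shared_top_words(text_90s, text_2010s, ai_terms, n=2)
print(shared)
```

With `n=2`, the toy texts share only “learning”, because “model” and “data” each reach the top 2 on one side only.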

Overall, the Artificial Intelligence journals from the 2010’s are not much different from the ones from the 90’s. However, they are less technical and more academic than those from the 90’s. I expressed two expectations in my proposal. One is valid, and the other is false. First, I expected that the 90’s articles used many more technical terms that might no longer be used in the 2010’s. I believe that it is valid to say so after what we discussed concerning Table 4. Second, I expected the articles from the 2010’s to be less formal. However, to my surprise, they have higher percentages of AWL and GSL 2nd 1000 words, both in types and in tokens. Therefore, my second expectation is invalid.

References:

Avdeev, Leo V., et al. “Towards automatic analytic evaluation of massive Feynman diagrams.” Nuclear Instruments and Methods in Physics Research Section A: Accelerators, Spectrometers, Detectors and Associated Equipment 389.1-2 (1997): 343-346.

Baxter, Jonathan, Andrew Tridgell, and Lex Weaver. “KnightCap: A chess program that learns by combining TD(lambda) with game-tree search.” arXiv preprint cs/9901002 (1999).

Bishop, Chris M. “Neural networks and their applications.” Review of Scientific Instruments 65.6 (1994): 1803-1832.

Chaves, Max. “Chess Pure Strategies are Probably Chaotic.” arXiv preprint cs/9808001 (1998).

Cohn, David A., Zoubin Ghahramani, and Michael I. Jordan. “Active learning with statistical models.” Journal of Artificial Intelligence Research 4 (1996): 129-145.

Friedman, Nir, and Joseph Y. Halpern. “Modeling belief in dynamic systems, part II: Revision and update.” Journal of Artificial Intelligence Research 10 (1999): 117-167.

Giraud-Carrier, Christophe G., and Tony R. Martinez. “An integrated framework for learning and reasoning.” Journal of Artificial Intelligence Research 3 (1995): 147-185.

Gottlob, Georg, Nicola Leone, and Francesco Scarcello. “Hypertree decompositions and tractable queries.” Proceedings of the eighteenth ACM SIGMOD-SIGACT-SIGART symposium on Principles of database systems. 1999.

Grove, Adam J., Joseph Y. Halpern, and Daphne Koller. “Random worlds and maximum entropy.” Journal of Artificial Intelligence Research 2 (1994): 33-88.

Hakkila, Jon, et al. “AI gamma-ray burst classification: Methodology/preliminary results.” AIP Conference Proceedings. Vol. 428. No. 1. American Institute of Physics, 1998.

Kaelbling, Leslie Pack, Michael L. Littman, and Andrew W. Moore. “Reinforcement learning: A survey.” Journal of Artificial Intelligence Research 4 (1996): 237-285.

Litman, Diane J. “Cue phrase classification using machine learning.” Journal of Artificial Intelligence Research 5 (1996): 53-94.

Murphy, Patrick M., and Michael J. Pazzani. “Exploring the decision forest: An empirical investigation of Occam’s razor in decision tree induction.” Journal of Artificial Intelligence Research 1 (1993): 257-275.

Murthy, Sreerama K., Simon Kasif, and Steven Salzberg. “A system for induction of oblique decision trees.” Journal of Artificial Intelligence Research 2 (1994): 1-32.

Nevill-Manning, Craig G., and Ian H. Witten. “Identifying hierarchical structure in sequences: A linear-time algorithm.” Journal of Artificial Intelligence Research 7 (1997): 67-82.

Nilsson, Nils. “Teleo-reactive programs for agent control.” Journal of Artificial Intelligence Research 1 (1993): 139-158.

Opitz, David W., and Jude W. Shavlik. “Connectionist theory refinement: Genetically searching the space of network topologies.” Journal of Artificial Intelligence Research 6 (1997): 177-209.

Pang, Xiaozhong, and P. Werbos. “Neural network design for J function approximation in dynamic programming.” arXiv preprint adap-org/9806001 (1998).

Safra, Shmuel, and Moshe Tennenholtz. “On planning while learning.” Journal of Artificial Intelligence Research 2 (1994): 111-129.

Samuel, Ken. “Lazy Transformation-Based Learning.” FLAIRS Conference. 1998.

Schaerf, Andrea, Yoav Shoham, and Moshe Tennenholtz. “Adaptive load balancing: A study in multi-agent learning.” Journal of Artificial Intelligence Research 2 (1994): 475-500.

Turney, Peter D. “Cost-sensitive classification: Empirical evaluation of a hybrid genetic decision tree induction algorithm.” Journal of Artificial Intelligence Research 2 (1994): 369-409.

Webb, Geoffrey I. “OPUS: An efficient admissible algorithm for unordered search.” Journal of Artificial Intelligence Research 3 (1995): 431-465.

Wolpert, David H., and Kagan Tumer. “An introduction to collective intelligence.” arXiv preprint cs/9908014 (1999).