Obesity and poor nutrition are serious health problems in the United States, particularly among children. Calcium is a key nutrient for children's growth and development, and milk is a common source of it. Many children, however, do not like plain milk, so parents often resort to processed, sugary chocolate milk. Some parents bypass this processed drink and make chocolate milk themselves with milk and a healthier, low-sugar chocolate syrup. The issue with homemade chocolate milk is that the amount of syrup is rarely right: either there is too much and the excess sinks to the bottom, or there is too little and the milk is not "chocolaty" enough. Self-stirring chocolate milk mugs have been created to produce an evenly mixed drink, but they do not prevent errors in the amount of chocolate syrup added. A chocolate milk mug that senses the concentration of chocolate in the milk and reacts to bring it to the optimal level would make healthier and more delicious chocolate milk.

Controlling the concentration of chocolate milk is justified by the health benefits of using a healthier, less processed chocolate syrup. Controlling the concentration also prevents overuse of chocolate syrup, which reduces the cost to the consumer.

Because the amount of chocolate syrup added by hand never seems to come out right, the homemade production of chocolate milk must be optimized. Cost is another reason: chocolate syrup costs an average of three dollars for 24 oz. If the syrup is not measured when it is added to the milk, the excess is detrimental to your pocket, because the few cents spent on syrup you do not need add up over time. Optimizing the amount of chocolate put into the milk saves money by reducing the amount of premade chocolate milk purchased and by not wasting syrup. Consumers also benefit because homemade chocolate milk can be healthier than the processed, pre-mixed brands.
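To give a feel for how the extra cents add up, here is a minimal sketch of the arithmetic. Only the price (three dollars for 24 oz) comes from the text above; the overpour of half an ounce per glass and the habit of one glass per day are assumed, illustrative numbers, and the function names are hypothetical.

```python
# Price quoted in the text: about $3.00 for a 24 oz bottle of syrup.
BOTTLE_PRICE_USD = 3.00
BOTTLE_SIZE_OZ = 24.0


def syrup_cost(ounces: float) -> float:
    """Cost in dollars of a given amount of syrup at the quoted price."""
    return ounces * BOTTLE_PRICE_USD / BOTTLE_SIZE_OZ


def yearly_waste(extra_oz_per_glass: float, glasses_per_day: float,
                 days: int = 365) -> float:
    """Dollars spent per year on syrup that was not needed."""
    return syrup_cost(extra_oz_per_glass) * glasses_per_day * days


if __name__ == "__main__":
    # Assumed habit (not from the report): 0.5 oz too much, one glass a day.
    print(f"Cost per ounce of syrup: ${syrup_cost(1.0):.3f}")
    print(f"Wasted per year: ${yearly_waste(0.5, 1):.2f}")
```

Under these assumptions the waste per glass is only a few cents, but it accumulates with every glass poured.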

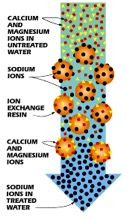

The first step in making the best chocolate milk is to identify the variables involved. The controlled variable for this system is the concentration of chocolate, inferred from the density of the milk, which is monitored using a sensor. The sensor measures the density throughout the mixing process, but we are mostly concerned with the concentration after mixing is complete. Since the sensor measures the density of the chocolate milk, it is important to determine the optimal measurement. We determined this by preparing several different concentrations in a fixed amount of milk and taste testing each one. The taste test showed that the optimal density of chocolate milk is 0.219 g/mL. Because there is bound to be some slight error, the acceptable range for the density of homemade chocolate milk is within roughly 10% of that value, or 0.196 g/mL to 0.239 g/mL. Although control of the concentration is not critical to the function of the mug, it is quite beneficial to the consumer, and our purpose is always to please the customer. The manipulated variable is the amount of chocolate syrup in the milk. It is the most practical variable to manipulate because it can be adjusted directly and it is what makes the milk taste delicious.
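The acceptance band can be written down as a simple check. The sketch below uses the target and limits quoted above; the function names and the labels "too weak" and "too strong" are hypothetical and chosen only for illustration.

```python
# Setpoint and limits taken from the taste test described in the text (g/mL).
TARGET_DENSITY = 0.219
LOWER_LIMIT = 0.196
UPPER_LIMIT = 0.239


def classify_density(density_g_per_ml: float) -> str:
    """Say whether a density reading falls inside the acceptable band."""
    if density_g_per_ml < LOWER_LIMIT:
        return "too weak"
    if density_g_per_ml > UPPER_LIMIT:
        return "too strong"
    return "acceptable"


def error_from_target(density_g_per_ml: float) -> float:
    """Control error: positive when more syrup is needed."""
    return TARGET_DENSITY - density_g_per_ml


if __name__ == "__main__":
    for reading in (0.150, 0.219, 0.260):
        print(reading, classify_density(reading),
              round(error_from_target(reading), 3))
```

A reading is treated as acceptable when it lies on or between the two limits, so the limits themselves count as in range.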

Several variables could disturb the system: the amount of milk placed in the mug, the brand of chocolate syrup, and the type of milk. Different grades of milk (for example, skim versus whole) have different densities, so the optimal density would need to be adjusted. The chocolate syrup can differ too, as each brand has a slightly different density and strength of chocolate flavor. While the Skinny Moo Mug is extremely reliable, it can underperform at times. Although the chocolate milk could suffer minor quality deficiencies due to these disturbance variables, the consumer can be at ease because they should be accounted for in the density readings taken by the concentration sensor. If the chocolate milk still does not taste right, we recommend using a spoon to ensure complete mixing. To tell whether any of these disturbances have affected the delectable, scrumptious taste of the chocolate milk, a feedback loop is appropriate. A feedback loop fits because the system is reactive: it does not change the amount of syrup, and therefore the density of the chocolate milk, until an error arises. Additionally, a feedback loop provides an explicit response in which the density of the chocolate milk is driven to a predetermined value.

In order to create the optimal chocolate milk, we will be constructing a variation of the “Skinny Moo Mug.” As seen in the YouTube video below, built into the mug is a spinner to ensure equal mixing of the chocolate syrup.

https://www.youtube.com/watch?v=xlX4Tyq-ShY

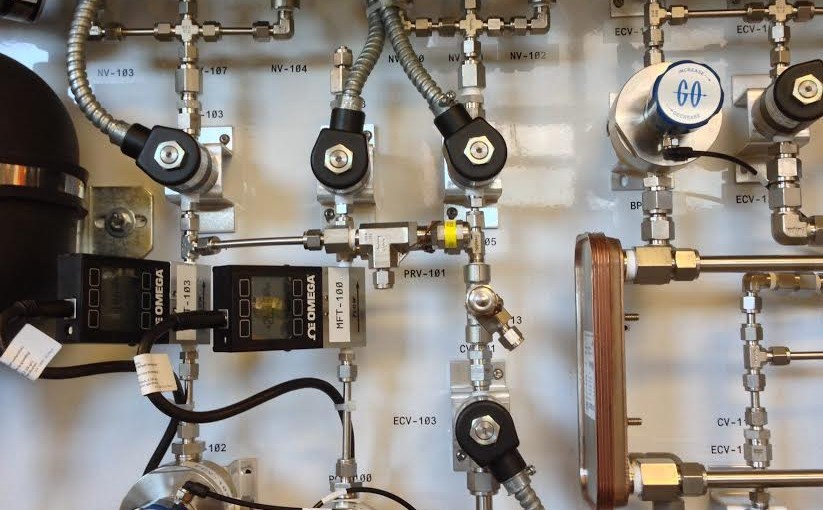

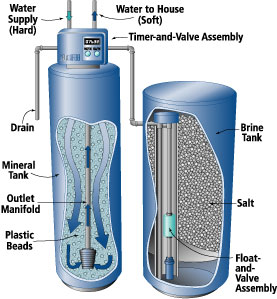

Instead of the standard cap seen in the stock photo, we will use a patented cap that has a flow hole at the bottom that opens and closes automatically. Rather than leaving the whole cap as an open space, we will create a wall on the ledge labeled 14E in the photo below. The wall ensures that one does not drink solely chocolate syrup when taking a sip of refreshing chocolate milk. Additionally, a hole will be made on the surface labeled 12D so that one can actually drink out of the mug. The original opening, shown in the picture as G, will be used as the opening to the reservoir where the chocolate syrup is added. To open and close the flow hole labeled 14A automatically, the cap needs to be connected to a concentration sensor.

As the cap already comes with the potential to be connected to a sensor, the only task is to insert our own sensor. The sensor that will be used is the Microelectromechanical Systems (MEMS) Liquid Density Sensor from Integrated Sensing Systems (as seen on page 5 of http://metersolution.com/wp-content/uploads/2014/08/Micro-liquid-density-sensor-User-Manual-1-1-1-1.pdf). As seen in the photo, the sensor is smaller than a dime, and it will be mounted on the inside wall of the mug. As the liquid is mixed and circulates in the mug, the chocolate milk flows through the sensor. The sensor will read the density, and therefore the concentration, of the chocolate milk. The MEMS sensor will be pre-programmed to transmit the density data to the receiver that is already in the cap. The receiver will also be pre-programmed to open or close the flow hole depending on the density it receives. The mug with the patented cap and density sensor will make for the most delicious, optimal chocolate milk.
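The open-or-close decision made by the receiver can be sketched as a simple on-off feedback loop. The code below is only an illustration under stated assumptions: it does not use any real sensor or cap interface, the reading is simulated as grams of syrup per mL of milk, and the milk volume, the syrup released per step, and all function names are hypothetical. Only the target and the limits come from the report.

```python
# Values from the report (g/mL).
TARGET_DENSITY = 0.219
LOWER_LIMIT = 0.196
UPPER_LIMIT = 0.239


def flow_hole_should_open(reading: float,
                          target: float = TARGET_DENSITY) -> bool:
    """On-off rule: keep the flow hole open while the reading is below target."""
    return reading < target


def simulate_fill(milk_ml: float = 240.0,
                  syrup_per_step_g: float = 2.0,
                  max_steps: int = 100) -> tuple[float, int]:
    """Run the feedback loop against a toy model of the mug.

    The 'sensor reading' is modeled as syrup mass divided by milk volume,
    an assumption made only so the loop has something to react to.
    Returns the final reading and the number of steps the hole was open.
    """
    syrup_g = 0.0
    open_steps = 0
    for _ in range(max_steps):
        reading = syrup_g / milk_ml          # simulated sensor value
        if not flow_hole_should_open(reading):
            break                            # target reached: close the hole
        syrup_g += syrup_per_step_g          # hole open: syrup flows in
        open_steps += 1
    return syrup_g / milk_ml, open_steps


if __name__ == "__main__":
    final_reading, steps = simulate_fill()
    in_band = LOWER_LIMIT <= final_reading <= UPPER_LIMIT
    print(f"final reading {final_reading:.3f} g/mL after {steps} steps, "
          f"within limits: {in_band}")
```

Because the cap can only add syrup and never remove it, the loop stops as soon as the target is reached; the amount released per step determines how far the final reading can overshoot, so it should be small relative to the width of the acceptable band.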

References:

http://www.google.com/patents/US8678220

https://www.youtube.com/watch?v=xlX4Tyq-ShY

US08678220-20140325-D00000.png
