Uranium was discovered in 1789 by the German chemist Martin Klaproth. Its radioactive properties were not discovered until 1896. Between 1919 and the 1930s, the components of the atom (protons, neutrons and electrons) were identified. In 1939 Otto Hahn and Fritz Strassmann documented that the nuclear fission process could release large amounts of energy.

Nuclear fission is a nuclear reaction or radioactive decay process in which the nucleus of an atom splits apart. Its discovery encouraged scientists in some European countries during WWII to press their governments to pursue an atomic weapon based on uranium, because some of its isotopes have a high number of neutrons and undergo fission easily. Continued research into uranium fission showed how the nucleus could be split with slow-moving neutrons, with heavy water serving as a moderator to slow them. This splitting released energy, and neutron capture by uranium produced two additional elements, neptunium and plutonium. The discovery that slow neutrons were highly effective in the fission process gave scientists the final piece needed to create a bomb from enriched U-235. Two conclusions followed from this work: uranium could be used to make a bomb, and uranium could be used as a source of power. Future applications were also considered, including uranium as a source of radioisotopes in place of radium, and as an energy source for turbines and naval propulsion. The scientists concluded that a uranium boiler was a promising technology, but not one worth pursuing during the war.

After the attack on Pearl Harbor, the United States invested vast resources in the development of the atomic bomb. The U.S. Army took over responsibility for building plants capable of producing fissionable material. By mid-1945, sufficient amounts of plutonium-239 and highly enriched uranium-235 had been produced.

After the military use of atomic power, countries began to develop methods of harnessing this energy to produce steam and electricity. Scientists across the globe recognized the potential for compact facilities with high energy output. The first nuclear reactor to produce electricity was the small Experimental Breeder Reactor (EBR-1) in Idaho, USA. In 1953 President Eisenhower proposed his “Atoms for Peace” program, which reoriented significant research effort towards electricity generation and set the course for civil nuclear energy development in the U.S.

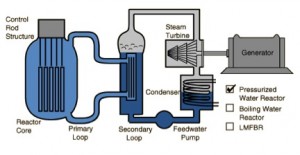

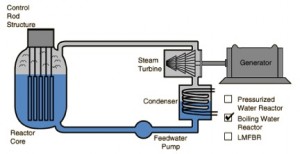

Also in the 1950s, Admiral Hyman Rickover developed the Pressurized Water Reactor (PWR), see image left, for naval submarine use. This technology was then used to create the first fully commercial PWR of 250 MWe, Yankee Rowe, which operated from 1960 to 1992. The Boiling Water Reactor (BWR), see image below, was also developed and first implemented in 1960 at Dresden-1, which similarly produced 250 MWe of electricity. Canada used reactors with natural uranium fuel and heavy water as both moderator and coolant, while France used a gas-graphite design. Today, 60% of the world's nuclear capacity is PWR and 21% is BWR.

The nuclear power industry experienced decline and stagnation from the late 1970s to about 2002, due to cost escalation, slower growth in electricity demand, and a changing regulatory environment. By the late 1990s, however, the industry had begun a comeback. The renewed demand for nuclear power stems from the scale of projected growth in worldwide electricity demand, awareness of the importance of energy security, and the need to limit the carbon emissions that drive global warming.

Sources:

http://www.world-nuclear.org/info/Current-and-Future-Generation/Outline-History-of-NUclear-Energy/, http://www.treehugger.com/energy-efficiency/us-nuclear-power-decline.html

Co-authors: Ari P. Langman and Matthew Pigott

Editor: Team