One of the key issues in data mining ethics is bias, especially against minorities. While the creators of technology rarely set out to design a system that is biased, it is nearly impossible to avoid bias entirely. Looking at history and the numerous examples of biased technology gives a clearer picture of the effects biased technology can have on people. For example, Ruha Benjamin’s Race After Technology begins by describing an issue that seems trivial: a soap dispenser that fails to recognize darker skin complexions. The engineers of the soap dispenser eventually fixed the issue and assured the public that it was unintentional. Dr. Benjamin uses this technological flaw as an entry point to larger and more consequential technologies that also show bias. Examining bias in these technologies, specifically predictive policing and facial recognition, makes it clear that these systems need to be recognized for what they do. By reviewing the issues raised in sources such as Ruha Benjamin’s Race After Technology and in several documentaries, news articles, and papers, we can begin to identify the existing problems surrounding bias in data mining.

In Professor Ruha Benjamin’s lecture at Lafayette College titled Race after Technology toward anti-racist design, she begins by giving historical context from Georges Cuvier, a French scientist of the early 1800s. She quotes his views of the white and black races. He wrote, regarding Caucasians, “The white race, with oval face, straight hair and nose, to which the civilized people of Europe belong and which appear to us the most beautiful of all, is also superior to others by its genius, courage and activity.” And regarding Black people, Cuvier wrote, “The Negro race…is marked by black complexion, crisped or woolly hair, compressed cranium and flat nose, the projection of the lower parts of the face, and the thick lips, evidently approximate it to the monkey tribe: the hordes of which it consists have always remained in the most complete state of barbarism.” (Benjamin, Race after technology toward anti-racist design). While these racist beliefs are now considered outdated, Professor Benjamin uses them to underscore the injustice in the history surrounding race and to trace the origins of racism. Creators of technology are not immune to biases or preexisting assumptions about subgroups, and these beliefs carry over into their creations despite their efforts to make technology neutral. Professor Benjamin demonstrates this by stating, “something can be nominally for everyone like a public bench, but the way we design it can have these built-in exclusions and harms. It also gets us to think about how we create a technology to fix a bigger problem.” Her public bench example shows how a design can affect subgroups differently, much like an automated soap dispenser that does not recognize certain skin pigmentation. Another example that Dr. Benjamin uses in her book is a beauty contest judged by artificial intelligence: “The venture involved a few seemingly straightforward steps…”

“1 Contestants download the Beauty AI app.

2 Contestants make a selfie.

3 Robot jury examines all the photos.

4 Robot jury chooses a king and a queen.

5 News spreads around the world.” (Ruha Benjamin, p. 49)

The 2016 contest collected over 6,000 submissions, and of the 44 winners, only one had visibly dark skin. Technologies involving big data can produce biased effects similar to those of the beauty contest. These parallels across technologies, each showing a different form of bias, lead to a question that Dr. Benjamin raises: is it possible to design robots or technology to think or act neutrally, without bias? Within this question lies another: whose mind is the algorithm modeled on? As noted earlier, it is unlikely that the people who designed the algorithm that judged the beauty contest identify as racists; however, the outcome of the contest was undoubtedly biased. In most cases technologies are created for the betterment of society, but as these cases show, they can have unintended biased consequences. The number of people affected in these examples is relatively small and the effects are comparatively minor (yet not unimportant); when scaled, however, bias in technology can have enormous power.

In Algorithms of Oppression: How Search Engines Reinforce Racism, Safiya Umoja Noble makes arguments for technological reform similar to Dr. Benjamin’s. The book aims to bring to light biases within technology that are not immediately visible. For example, an evaluation of Google searches shows how certain racist and sexist stereotypes are reinforced. One part of this analysis compared two Google searches. For “why are black women so…”, Google’s suggested completions included “angry, loud, mean, attractive, lazy, annoying,” and so on. For “why are white women so…”, the suggestions included “pretty, beautiful, mean, easy, insecure, skinny,” and so on. (Safiya Umoja Noble, p. 21).

Figure 5. Google results for “why are Black women so…” (Algorithms of Oppression, 2018)

Figure 6. Google results for “why are white women so…” (Algorithms of Oppression, 2018)

Some other Google searches showed worse and more graphic forms of racism and sexism, which Google eventually changed after public criticism. Another example of bias in data discussed in Algorithms of Oppression was the 2015 “glitch” in Google’s algorithms that tagged African Americans as “apes” or “animals” and led Google Maps searches to the White House when the word “N*gger” was used during the Obama presidency. (Safiya Umoja Noble, p. 6).

Figure 7: Tweet by DeRay Mckesson about Google Maps and the White House in 2015 (Algorithms of Oppression, 2018)

These issues were eventually fixed and Google apologized, but only after the damage was done. Safiya Umoja Noble states, “I often wonder what kind of pressures account for the changing of search results over time. It is impossible to know when and what influences proprietary algorithmic design, other than that human beings are designing them and that they are not up for public discussion, except as we engage in critique and protest.” (Safiya Umoja Noble, p. 4). Even once these issues are discovered and fixed, the larger question of why they exist remains. Noble argues that these incidents show that sexism and racism are still part of American society and must be recognized as such rather than dismissed as machine error. Data-driven algorithms continue to be built without a solution to this problem, and they require reform in order to create equitable systems. Facial recognition has been identified as a biased technology in the past, but it has not received enough attention to drive the necessary remediation.

Looking at facial recognition, a technology that has been shown to have a large effect on people in differing contexts, especially subgroups, gives a clearer picture of the issues that come from bias in data mining, as well as of the responses to those issues, or lack thereof. Facial recognition is a system that uses biometrics to map facial features from photos or video and then compares those features with known faces to identify a person. Facial recognition, or FR, is a revolutionary technology that can help identify people for medical, legal, and, mainly, security reasons. The automated system is efficient: it is used to prevent crime and terrorism and to find missing persons, and it has streamlined security and supported medical efforts. However, FR has also raised concerns about privacy and about racial and gender bias. In the Technology Assessment Report on Facial Recognition created by the University of Michigan Science, Technology, and Public Policy Program, the authors express their concerns about the power of the technology and the bias that accompanies it. FR has been introduced into society and, more recently, into schools across the globe. With this, “a growing body of evidence suggests that it will erode individual privacy and disproportionately burden people of color, women, people with disabilities and trans and gender non-conforming people.” (Cameras in the Classroom Executive Summary, p. 4). The report points out the implications of FR in schools specifically. These implications include: exacerbating racism, normalizing surveillance, defining the acceptable student, commodifying data, and institutionalizing inaccuracy. As concerns surrounding FR grow alongside racial tensions around the globe, more evidence about the consequences of FR has come to light.
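The matching step described above (comparing a face’s features against known faces) can be sketched as a nearest-neighbor search over feature vectors. This is a minimal illustration under stated assumptions, not how any particular FR product works: faces are assumed to have already been converted into numeric feature vectors (real systems typically use a trained neural network for that step), and the names, the toy three-number vectors, and the 0.8 similarity threshold are all hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def identify(probe, gallery, threshold=0.8):
    """Return the name of the most similar known face, or None if no
    candidate clears the (hypothetical) similarity threshold."""
    best_name, best_score = None, -1.0
    for name, features in gallery.items():
        score = cosine_similarity(probe, features)
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= threshold else None


# Hypothetical gallery of "known faces" with toy 3-dimensional feature
# vectors; real systems use vectors with hundreds of dimensions.
gallery = {
    "person_a": [0.9, 0.1, 0.3],
    "person_b": [0.1, 0.8, 0.5],
}

print(identify([0.85, 0.15, 0.25], gallery))  # person_a
print(identify([0.0, 0.0, 1.0], gallery))     # None (no match clears 0.8)
```

The threshold is where error rates get decided: if the feature extractor separates some groups’ faces less cleanly, the same threshold can produce more false matches or missed matches for those groups, which is one way the disparities discussed here can arise.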

As issues surrounding facial recognition persist and escalate, the need to recognize these issues for what they are grows. In the documentary Coded Bias (2020), MIT Media Lab researcher Joy Buolamwini discovers inaccuracies in FR when identifying different subgroups, specifically women of color. Buolamwini investigates the origins of FR and its disparities in accuracy, and she pushes for new legislation covering FR and similar technologies. She states, “because of the power of these tools, left unregulated there is no recourse for abuse… we need laws.” (Joy Buolamwini, Coded Bias). The documentary seeks to answer two questions: “what is the impact of Artificial Intelligence’s increasing role in governing our liberties? And what are the consequences for people stuck in the crosshairs due to their race, color, and gender?” (Human Rights Watch). FR has been banned in several United States cities, such as Oakland, San Francisco, and Somerville, Massachusetts. Other communities fight for regulation and transparency regarding where people may be surveilled in order to protect privacy and civil liberties. Buolamwini, founder of the Algorithmic Justice League, has helped put a hold on different FR software by standing up to Big Tech. A Fast Company article states that “In June, Amazon announced that it was issuing a moratorium on police use of its controversial facial recognition software, called Rekognition, which it had sold to law enforcement for years in defiance of privacy advocates. The move marked a remarkable retreat for Amazon’s famously stubborn CEO. And he wasn’t alone. IBM pledged that same week to stop developing facial recognition entirely, and Microsoft committed to withholding its system from police until federal regulations were passed.” (Amy Farley, 2020).
FR serves as an extensive example of the ethical issues in data mining and shows that government intervention and legislation lag behind newer technologies.

Activists like Joy Buolamwini are uncommon but necessary in today’s world to bring about change. Virginia Eubanks is a writer and activist who, like Buolamwini, examines technology’s disproportionate effects, in her case on the poor. In her books Automating Inequality: How High-Tech Tools Profile, Police, and Punish the Poor and Digital Dead End: Fighting for Social Justice in the Information Age, she examines the impacts of “data mining, policy algorithms, and predictive risk models on poor and working-class people in America.” She states, “today, automated systems control which neighborhoods get policed, which families obtain needed resources, and who are investigated for fraud. While we all live under this new regime of data analytics, the most invasive and punitive systems are aimed at the poor.” (Automating Inequality, 2020). Eubanks’s and Buolamwini’s messages have been widely regarded as eye-opening. Books, articles, documentaries, and even movements are necessary to call out these issues, which in the past have been widely ignored. Today, activists are calling to “defund the police,” a slogan that stands for reallocating funds to social programs that, in certain circumstances, can help the public better than a police officer can. As mentioned, facial recognition is used for security and policing; however, many other policing technologies have also shown disproportionate effects on certain subgroups.

When looking at bias in security, high-tech policing serves as a prime example of technology that has deviated from its original intent, has led to the targeting of subgroups, and in some cases has subsequently increased police brutality. As mentioned, facial recognition is one of these policing technologies. Others include cell-site simulators, which can pinpoint cell phone locations and intercept calls and text messages. Automatic license-plate readers photograph license plates and store the images in a database, enabling police officers to track vehicles. Z-backscatter vans are essentially X-ray machines that can see through vehicles and buildings. Closed-circuit television cameras allow 24/7 surveillance. Body cameras are meant to hold police officers accountable. Social media monitoring allows police to locate people based on posts, keywords, hashtags, or geographic location. Lastly, there is a policing technology that has generated a massive amount of controversy: predictive policing software. Predictive policing software is an “algorithm that analyzes past crime data and other factors to forecast risk of future crime.” (High-Tech Policing, Barbara Mantel). In CBSN’s racial profiling documentary Racial Profiling 2.0, predictive policing programs such as PredPol (short for predictive policing) and Laser are analyzed for their disproportionate effect on subgroups. The documentary focuses on South LA, which falls under the jurisdiction of the LAPD, a department that polices a population of 4 million. South LA has a crime rate 17% above the national average and consists predominantly of underprivileged communities whose residents are 98% minority.
Throughout the documentary, the LAPD emphasized that the goal of PredPol and Laser is to reduce and prevent crime. However, the documentary shows that when historical data that has been shown to be biased is used to direct police toward underprivileged communities, it creates a feedback loop that strengthens the bias in the data: more patrols in those areas produce more recorded incidents, which the software then reads as evidence of more crime. One of the programs used by the LAPD, Laser, a form of predictive policing that ranked individuals on their likelihood to commit crime, was highly controversial and was discontinued in 2019 following an internal LAPD review, after the LAPD was sued by South LA community members. While PredPol remains highly controversial and is accused of hardwiring systemic issues into policing technology, it is still used by the LAPD today. At the end of Racial Profiling 2.0, a statement from the LAPD was shown: “the LAPD is moving to a data driven – community focused model of building trust and reducing crime… PredPol will continue to be utilized as a strictly location based strategy for property crimes. No aspect of Laser will be used in either model.” Whether the benefits of these technologies outweigh their costs is highly contested; however, there are undoubtedly ethical issues surrounding them. This issue in particular connects to the Black Lives Matter movement, as minority communities are being targeted by these technologies. The public, however, is largely unaware of what these technologies can do and of the ways they are used that disproportionately affect subgroups.
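The feedback loop described above can be illustrated with a toy simulation. This is a deliberately simplified, hypothetical model (the function name, the two neighborhoods, and all numbers are invented for illustration) and it does not describe how PredPol or Laser actually work. Two neighborhoods have the same true rate of crime, but the historical records over-represent one of them; each day a single patrol is sent wherever the records show the most crime, and crime is only recorded where police are present.

```python
def simulate_feedback_loop(recorded, true_rate, days):
    """Toy model of a predictive-policing feedback loop (hypothetical).

    recorded:  neighborhood -> historically recorded incidents
    true_rate: neighborhood -> incidents that actually occur each day
    Each day one patrol goes wherever the records show the most crime,
    and only incidents in the patrolled neighborhood get recorded.
    """
    recorded = dict(recorded)  # copy so the caller's history is untouched
    for _ in range(days):
        # "Prediction": send the patrol to the neighborhood with the most records.
        target = max(recorded, key=recorded.get)
        # Only crime the patrol witnesses enters the data set.
        recorded[target] += true_rate[target]
    return recorded


# Hypothetical numbers: identical true crime rates, but the historical
# records over-represent neighborhood A (e.g. because it was patrolled more).
history = {"A": 60, "B": 40}
true_rate = {"A": 5, "B": 5}

print(simulate_feedback_loop(history, true_rate, days=30))
# {'A': 210, 'B': 40}
```

Even though both neighborhoods have identical true crime rates, every new record lands in A, so the data increasingly appears to confirm the original bias while crime in B goes unrecorded.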

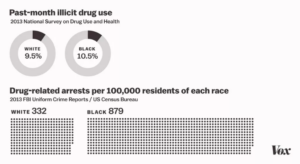

Figure 8: Graphic showing the relationship between past-month illicit drug use and drug-related arrests per 100,000 residents of each race. (There are huge racial disparities in how US police use force, 2018)

Racial Profiling 2.0 also provides statistics that illustrate these ethical issues surrounding bias in data. A 2008 Yale Law study found the following: for every 10,000 residents, 3,400 more black people are stopped than white people. Black people are 127% more likely to be frisked than white people. Frisked black people were 24% less likely than white people to be found with a weapon, and 24% less likely to be found with drugs. A 2011 study also found that in New York, the numbers of police stops were: black, 350,743; latinx, 223,740; and white, 61,805. This means black New Yorkers were stopped and frisked nearly six times as often as white New Yorkers. (Racial Profiling 2.0, 2020)
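The “nearly six times” figure can be checked directly from the 2011 counts above. Note that these are raw stop counts: turning them into a per-person likelihood would require each group’s population, which the source does not give, so the ratio below describes how many more stops occurred, not a per-capita probability. The variable names are illustrative.

```python
# 2011 New York stop counts as reported in Racial Profiling 2.0 (raw counts).
stops_2011 = {"black": 350_743, "latinx": 223_740, "white": 61_805}

# Each group's stops relative to the number of stops of white residents.
ratios = {group: count / stops_2011["white"] for group, count in stops_2011.items()}

for group, ratio in ratios.items():
    print(f"{group}: {ratio:.2f}x the stops of white residents")
# black: 5.67x the stops of white residents
# latinx: 3.62x the stops of white residents
# white: 1.00x the stops of white residents
```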

As the statistics above show, bias exists and is being reinforced by technology. Ten years ago, it would have been hard to believe that algorithms would have the power they do today. Especially now, during unprecedented times when people are working from home, learning from home, and not seeing others face to face, our reliance on technology has grown dramatically. There are no easy solutions to these issues; however, because of the efforts of Ruha Benjamin, Safiya Umoja Noble, Joy Buolamwini, and Virginia Eubanks, people are becoming more aware that bias exists in technology. Biased technology can be found in job applications, housing applications, credit card companies, facial recognition, predictive policing, and nearly any software that uses big data. Awareness that these issues exist within the invisible world of big data is one of the most important steps toward resolving them. Most of these systems are already in place and widely used, even though they require reform to avoid the disproportionate effects that follow them. Policy change has proven effective; however, as time passes, newer forms of technology will continue to show bias unless the issue is addressed at its root. Reforming education will allow students to create technologies for a more equitable future, free of the systemic racism and inherent biases that frame big data. Without an educational approach that makes designers aware of these issues, government intervention will be left trying to fix each new technology after it has already shown bias.
