The internet has done incredible things for humanity: it connects us in ways we never imagined and is revolutionizing nearly every aspect of our lives. Using it, however, comes at a cost to our privacy. Once your personal information is out there, you lose some control over it, and it may be used in unexpected ways without your knowledge. A Pew Research poll shows that 59% of Americans have little to no understanding of how their data is being used and 79% have privacy concerns about their information being collected (“Americans and Privacy”, 2019). With so much of life moving online, over 60% of Americans feel that they cannot go about their daily lives without being tracked by corporations, and 81% feel that they have little to no control over their data once it is online (“Americans’ Complicated Feelings about Social Media in an Era of Privacy Concerns”, 2018). The underlying issue is the explosion of big data and companies’ interest in exploiting it for profit. This “surveillance capitalism,” a term coined by Harvard professor Shoshana Zuboff, is not only immoral but is also eroding our very individualism and free will (Laidler, 2019). Companies operating under surveillance capitalism have strong economic incentives to take private personal data and sell it to third parties as “prediction products.” Ultimately, as Professor Zuboff explains, this means not only amassing huge amounts of our data but actually changing our behavior (Laidler, 2019). Nowhere is this more evident than on social media.

There are over three billion social media users today (Dean, 2020). Every one of them had to provide personal information such as their name, age, gender, and geographic location just to create an account, not to mention the trillions of posts, retweets, and pictures that follow (Antcliff, 2017).

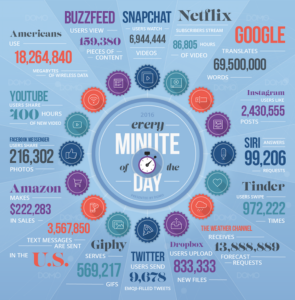

Figure 14. Social media data produced per minute (Cornwall SEO, 2017)

Social media companies are sitting on a massive pile of data, so what do they do with it? First, it is important to recognize that these are all for-profit companies, and their business model requires a revenue stream. Since signing up is free, they have to find other ways to make money. As stated in The Social Dilemma, “If you’re not paying for the product, then you are the product.” These companies make money by monetizing our data, whether we are aware of it or not. One of the most common ways they do this is through ads, which account for nearly all of the revenue of many social media platforms (Lyengar, 2020). They cannot display just any ad, however: a fifteen-year-old girl is unlikely to click on an ad for a Brooks Brothers suit. To be effective, ads must be targeted, meaning they have to apply to you, or to a generic profile of someone with your characteristics and interests. Advertisers need to know who their customers are, so they pay social media companies for access to user data, direct where their ads are placed, and then reap the benefits of increased sales and interest in their products. In this sense, social media companies and advertisers control, to a certain extent, what you interact with on your screen. Facebook in particular has come under scrutiny for “selling” user data, but it has argued against this, saying it only sells a user’s IP address, which protects the individual’s anonymity (Leetaru, 2018). This is not quite true, however: while Facebook may not be selling a full profile of a user, an advertiser certainly knows a lot about a person who clicks on its ad through Facebook, since the ad was targeted at users with certain characteristics. If that is not concerning enough, there are plenty of ways a third party can re-identify an individual from an IP address, given the vast amount of data available online (Leetaru, 2018).
Even when given just a number, companies can piece together a profile that reveals more about you than you would probably be willing to share if one of their employees approached you on the street.

The Social Dilemma depicts our relationship with social media in a dark light. In the Netflix documentary, users are treated like lab rats, while the social media companies act as puppeteers behind the scenes, pulling all the strings. The portrayal is more realistic than some may think. As mentioned earlier, these companies earn most of their revenue through ads, and that revenue depends on users spending time on their platforms. It is therefore in their economic interest to maximize how much time users spend on, and how much they interact with, the site. The Social Dilemma attempts to show that these companies do not have your best interests at heart, only their own. The audience watches as nameless employees representing a social media company manipulate a user’s experience on the app and, by extension, his emotions in real life.

Figure 15. A still from The Social Dilemma (The Quint, 2020)

It is disconcerting that these employees seem to know everything about the user and exactly what content will elicit the emotional response they are looking for. One of the documentary’s central points is that social media companies subtly change our behavior and ways of thinking over time by influencing what information we are exposed to and interact with. This ties back to the surveillance capitalism Professor Zuboff describes. All companies with an interest in user data, not just social media companies, have an economic incentive to gather our personal information and subtly steer our behavior toward the outcomes most profitable for them. This raises questions of free will and human autonomy. If these companies influence our behavior by putting in front of us what they want us to see, is that behavior our own choice, or theirs? Professor Zuboff argues that this system is not only an existential threat to democracy, because it erodes our individualism, but also makes our economy inefficient due to asymmetric information: these companies know everything about us, while we know next to nothing about them (Laidler, 2019).

Social media companies in particular have come under increased scrutiny for failing to protect people’s privacy, but has there been any real progress? Facebook fired back at The Social Dilemma, criticizing its depiction of how social media companies work. The company adamantly denies that we are the product, while maintaining that targeted ads are an essential source of revenue (“What The Social Dilemma Gets Wrong”, 2020). It also pointed to an updated privacy agreement with the FTC, which on the surface seems like a step in the right direction; critics, however, say that “the FTC tinkers around the edges, but largely allows Facebook to police itself, which it has consistently shown it is incapable of doing” (Newman, 2020). If social media companies cannot guarantee our privacy, can we take matters into our own hands? Realistically, there is not much we can do once our data is out there. While it is important to understand the available online privacy settings and tailor them to your preferences, that is hard to do when the majority of Americans do not understand them. A Pew Research poll showed that while 97% of Americans have been asked to agree to privacy policies, only 20% of adults either sometimes or always read them (“Americans and Privacy”, 2019). Who wants to spend an hour reading dense, esoteric legalese anyway? And even if you did read through it all, would you understand it? Once people become aware of what is going on behind the scenes, however, they have been shown to reject these practices and become more reluctant to share their personal data online (Swant, 2019). Through education, people may come to understand how little privacy they are offered and, as a result, distance themselves from these platforms. Companies would then be forced to respond to the drop in users, ideally by guaranteeing to protect individuals’ information, or at least by being more transparent about how their data is used.
Another popular solution is increased federal regulation. Companies bound by external rules would be forced to meet higher privacy standards or else face hefty fines or other penalties. A recurring theme in The Social Dilemma is that these companies’ business models require us to be the product. To better protect our privacy, the film’s interviewees argue, companies must change the way they are structured and realign their priorities to bring value to their customers. One way to encourage this would be to tax data collection and distribution, giving companies a financial incentive not to amass our personal information endlessly.

There is no silver bullet that will solve the problem of online data privacy. The absence of comprehensive, enforceable regulation, problematic business models, and a lack of transparency all contribute to the underlying issue. To start, we can educate people about surveillance capitalism and the practices of these companies, but more will be required if we hope to be truly protected online. As technology advances, this problem will only become more pressing and widespread.
