Some details of the system are provided here, but a more complete report will be provided in an upcoming magazine article (currently under review in IEEE AESS Magazine).

Background

This project was inspired by some of our meetings in the N85 Aerospace Club starting around 2016, when I was serving as a content expert for the club. As we were making plans for our next generation of flight simulator (which eventually became the “Dynamite Room”), Shawn Sheng (one of the student members) was adamant that we should pursue a virtual reality (VR) based simulator. He was convinced that this was the future of flight simulation, and that a multi-screen system would quickly become dated. Although we all agreed that VR was pretty cool and gave you an immersive experience, there were concerns that there would be no “feel” to the controls, meaning the system would be useless for real flight training. We eventually scrapped the VR idea and built a full-instrumentation simulator with wrap-around displays. But those meetings got me thinking about ways you could use VR without sacrificing the feel of actual controls.

In 2017, after playing around with an inexpensive Dell Visor headset and USB camera under Linux, I found that modern PCs and GPUs were fast enough to allow camera video to be processed and rendered on the headset display in near real time. My idea at that time was to do a split-screen type display with flight simulator video on the top and see-through video from the camera (the cockpit) on the bottom.

In 2018, I hired Zhengxie Hu, a student researcher in the Lafayette EXCEL program, to investigate what we called an “Artificial Reality” flight simulator (the correct term was augmented reality) that combined real-time video of the cockpit with flight simulator scenery. Zhengxie looked at the feasibility of detecting markers on the edges of the instrument panel in real time, which provided (1) information on the real-time position and pose of the pilot’s head, and (2) the position of the window areas where scenery should be rendered on the headset display. Using OpenCV and ArUco tags, we were able to prototype several aspects of the final system.

In 2019, I hired Daniel Carroll (also a summer research student in EXCEL) to continue the work started by Zhengxie, but to use a more capable HTC Vive headset that we had access to. Daniel was able to identify and start some simple VR-based programming using the OpenVR library. He also convinced me that leveraging the built-in localization capability of the headset would work much better than trying to estimate pose from ArUco tags. In our discussions, we also came up with the idea of green-screen (or chroma key) detection, which would allow a more seamless combination of real controls and VR imagery. Based on initial promising results, we decided to buy our own HTC Vive Pro headset, hoping that its stereo camera could be used for the see-through video. Unfortunately, the HTC Vive stereo camera is only 480p, which is too low to provide useful cockpit imagery.

While on sabbatical in 2019-2020, I was able to take several of the components developed by the students and integrate them together into a working proof-of-concept version of the AR simulator that demonstrates both the geometry-based and green-screen-based overlay techniques for flight simulation. Using the latest PC hardware and a mid-level Nvidia RTX-2060 GPU, the system is able to provide realistic flight simulation at 60 FPS.

Hardware Components

The basic hardware components of the system are listed below:

- HTC Vive Pro headset for viewing and head tracking

- Ovrvision Pro stereo camera for real-time cockpit video

- PC with Ryzen-7 2700x CPU and 32 GB RAM

- NVIDIA RTX-2060 GPU

- Cockpit controls (rudder pedals, control stick, throttle quadrant) from various suppliers

- Green screen and stands bought from vendors on Amazon

Total cost of the hardware was around $4000, which is quite low compared to many commercial flight simulators, especially those providing an immersive experience.

Software Components

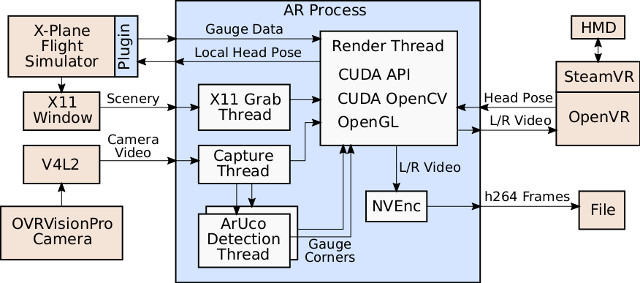

A high-level diagram of the system software components is shown below:

Briefly, the system consists of the following software components:

- X-Plane Flight Simulator: Scenery and simulation of the aircraft dynamics.

- Video4Linux2: An API for reading frames from the Ovrvision camera at 60 FPS.

- OpenVR: An API for head tracking and displaying video on the HTC Vive Pro. Requires SteamVR underneath.

- AR Process: A custom program that was written to implement the AR flight simulator.

The basic operation of the AR Process program is as follows. Video is captured from the stereo cameras mounted on the headset, coincident with video frames that are grabbed from an X11 window from the X-Plane flight simulator. The camera video (cockpit) and flight simulator video (scenery) are then combined in the Render Thread, which works one of two ways:

- Geometry-based Overlay: A 3D model of the cockpit windows is used to know where to draw camera and flight simulator video.

- Green-screen Overlay: A green screen is placed behind the cockpit controls, allowing fairly automatic detection of cockpit regions and scenery regions.
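Both overlay modes can be thought of as producing a per-pixel mask that says where scenery should replace camera video. The following is a minimal sketch of that idea in plain Python, not the code from the AR Process program. The function names, the HSV thresholds, the pinhole camera model, and the assumption that the window corners have already been transformed into the head (camera) frame using the tracked pose are all illustrative choices; a real implementation would do this work on the GPU.

```python
import colorsys


def is_green(pixel, hue_center=1 / 3, hue_tol=0.08, min_sat=0.4, min_val=0.2):
    """Return True if an (r, g, b) pixel in 0..255 looks like green screen.

    The thresholds are hypothetical and would need tuning for real lighting.
    """
    r, g, b = (c / 255.0 for c in pixel)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    return abs(h - hue_center) <= hue_tol and s >= min_sat and v >= min_val


def chroma_mask(camera):
    """Green-screen overlay: mask is True wherever the camera sees green."""
    return [[is_green(p) for p in row] for row in camera]


def project(point, focal_px, cx, cy):
    """Pinhole projection of a head-frame point (x right, y down, z forward).

    Assumes z > 0, i.e. the point is in front of the viewer.
    """
    x, y, z = point
    return (focal_px * x / z + cx, focal_px * y / z + cy)


def inside(poly, px, py):
    """Even-odd ray-casting test: is (px, py) inside the 2D polygon?"""
    hit = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        if (y1 > py) != (y2 > py):  # edge straddles the horizontal ray
            x_cross = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
            if px < x_cross:
                hit = not hit
    return hit


def geometry_mask(window_corners, width, height, focal_px):
    """Geometry-based overlay: mask is True inside the projected window."""
    cx, cy = width / 2, height / 2
    poly = [project(p, focal_px, cx, cy) for p in window_corners]
    return [[inside(poly, x + 0.5, y + 0.5) for x in range(width)]
            for y in range(height)]


def composite(camera, scenery, mask):
    """Take the scenery pixel where the mask is True, else the camera pixel."""
    return [[s if m else c for c, s, m in zip(crow, srow, mrow)]
            for crow, srow, mrow in zip(camera, scenery, mask)]
```

For example, `composite(camera, scenery, chroma_mask(camera))` gives the green-screen result for one eye, while swapping in `geometry_mask(...)` gives the geometry-based result from the same camera and scenery frames. Keeping the mask separate from the compositing step makes it easy to switch between the two modes.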

A detailed flowchart of the various processes required for operation of the AR flight simulator is given below:

Source Code

Since it is difficult to describe every detail of the algorithms here, we are providing prototype source code of the AR flight simulator under the terms of the MIT license. In short, you are free to use this code however you want, provided the copyright and license notice crediting its authors is kept with it.

ar_flight_simulator_0.1_source.zip

Demo Videos

Thanks to the NVENC hardware encoder in NVIDIA’s RTX GPUs, video from the AR simulator can be encoded in real time and stored on disk. I have a few videos of the simulator being used to fly a simple takeoff and landing pattern.